Low-Light Image and Video Enhancement Using Deep Learning: A Survey

TPAMI 2021

Abstract

Low-light image enhancement (LLIE) aims to improve the perception or interpretability of an image captured in an environment with poor illumination. Recent advances in this area are dominated by deep learning-based solutions, which employ a wide range of learning strategies, network structures, loss functions, and training data.

In this paper, we provide a comprehensive survey covering aspects ranging from algorithm taxonomy to unsolved open issues. To examine the generalization of existing methods, we propose a low-light image and video (LLIV) dataset, in which the images and videos are captured with the cameras of different mobile phones under diverse illumination conditions. In addition, we provide, for the first time, a unified online platform that covers many popular LLIE methods and generates their results through a user-friendly web interface. Beyond qualitative and quantitative evaluation of existing methods on publicly available datasets and on our proposed dataset, we also validate their performance on face detection in the dark.

This survey, together with the proposed dataset and online platform, could serve as a reference for future studies and promote the development of this research field. The proposed platform and dataset, as well as the collected methods, datasets, and evaluation metrics, are publicly available and will be updated regularly.

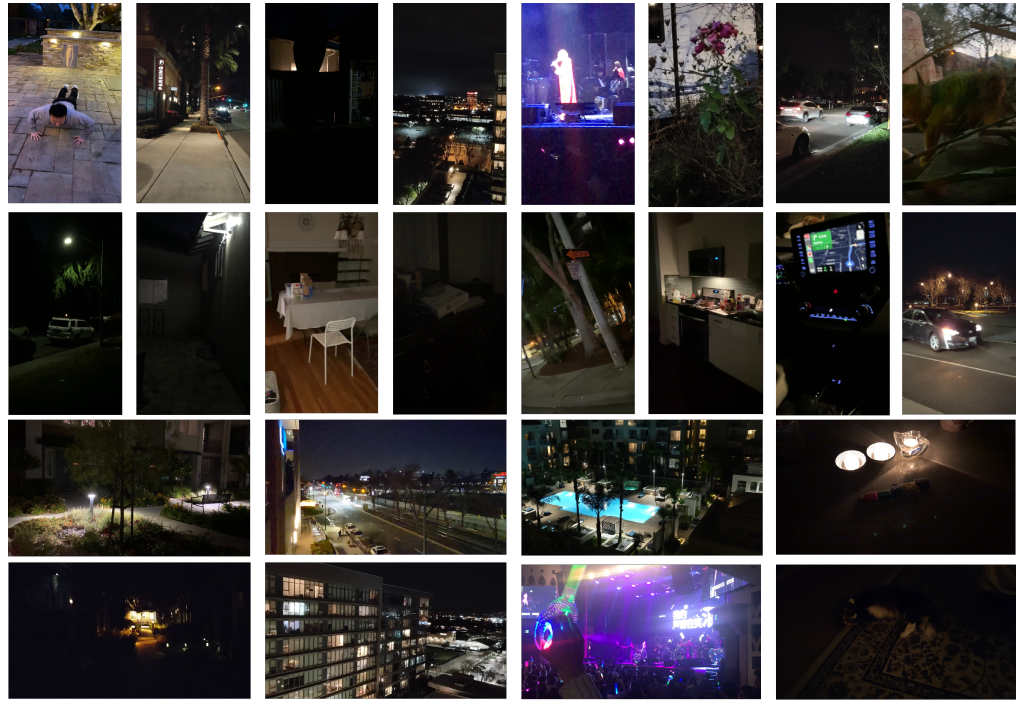

Examples of images taken under sub-optimal lighting conditions. These images suffer from buried scene content, reduced contrast, amplified noise, and inaccurate color.

LLIE Methods

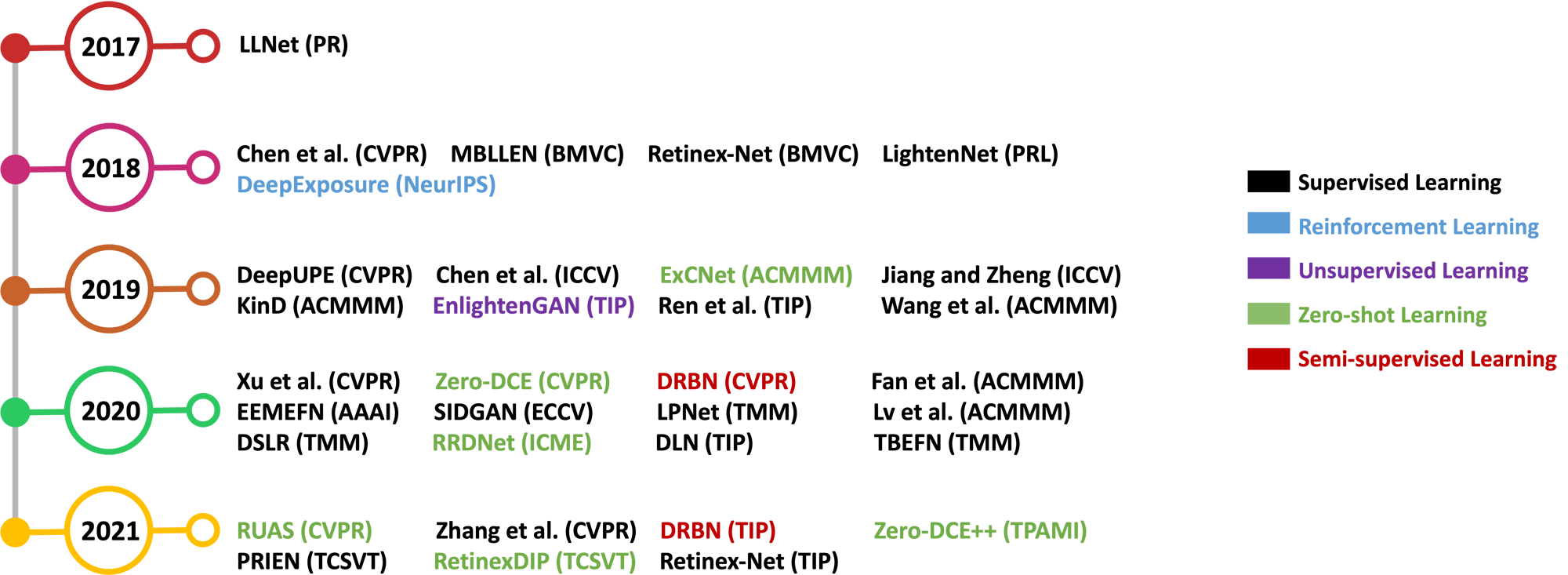

A concise timeline of milestones in deep learning-based low-light image and video enhancement. Since 2017, the number of deep learning-based solutions has grown year by year. The learning strategies used in these solutions include Supervised Learning, Reinforcement Learning, Unsupervised Learning, Zero-Shot Learning, and Semi-Supervised Learning.

Dataset: LLIV-Phone

We propose a Low-Light Image and Video dataset, called LLIV-Phone, to comprehensively validate the performance of LLIE methods. LLIV-Phone is the largest and most challenging real-world test dataset of its kind. It contains 120 videos (45,148 images) captured with the cameras of 18 different mobile phones: iPhone 6s, iPhone 7, iPhone 7 Plus, iPhone 8 Plus, iPhone 11, iPhone 11 Pro, iPhone XS, iPhone XR, iPhone SE, Xiaomi Mi 9, Xiaomi Mi Mix 3, Pixel 3, Pixel 4, Oppo R17, Vivo Nex, LG M322, OnePlus 5T, and Huawei Mate 20 Pro. The videos cover diverse illumination conditions (e.g., weak lighting, underexposure, moonlight, twilight, dark, extremely dark, back-lit, non-uniform light, and colored light) in both indoor and outdoor scenes.

Online LLIE-Platform

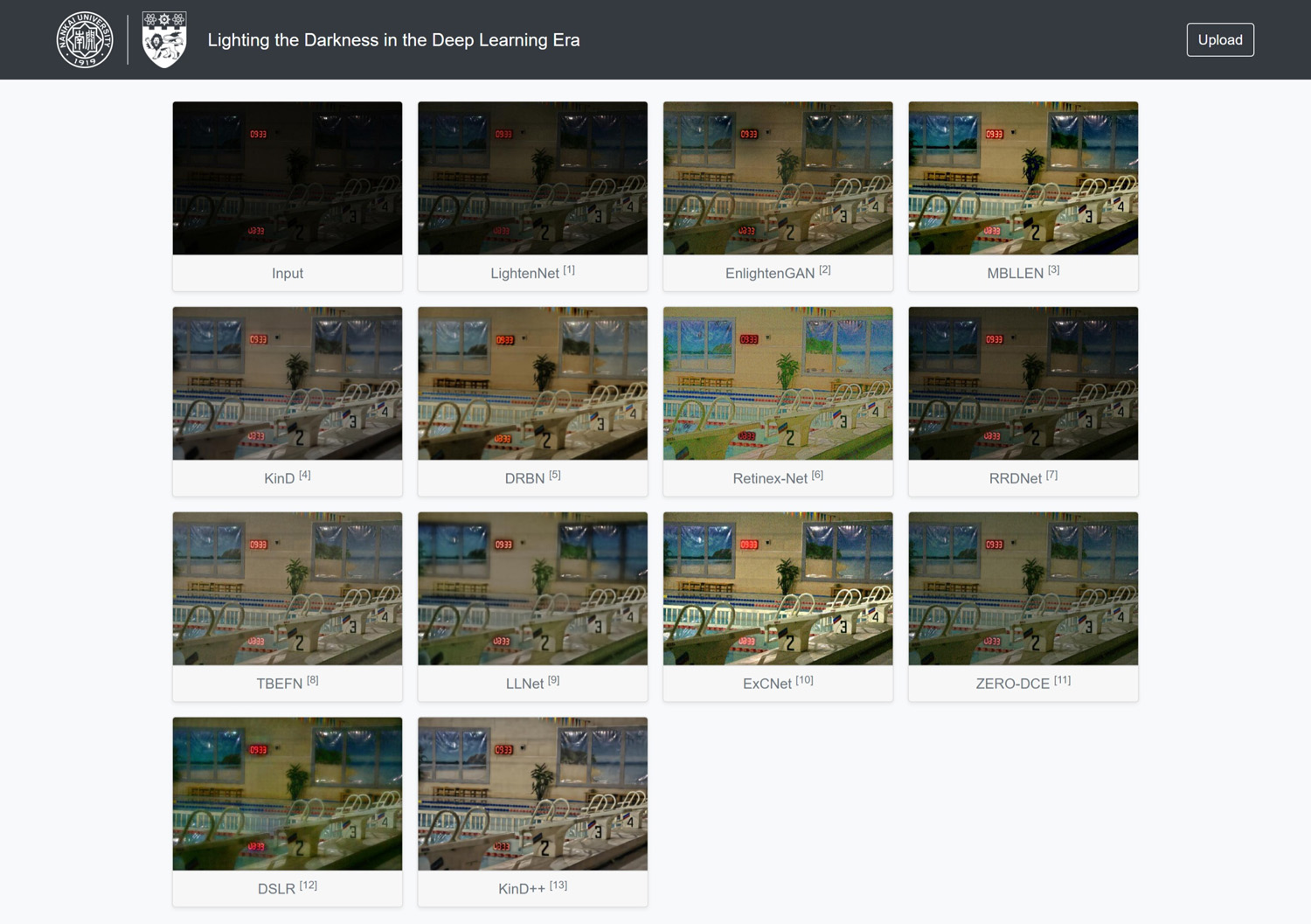

Different deep models may be implemented on different frameworks, such as Caffe, Theano, TensorFlow, and PyTorch. As a result, different algorithms demand different configurations, GPU versions, and hardware specifications. Such requirements are prohibitive for many researchers, especially beginners who are new to this area and may not even have access to GPU resources. To address these problems, we developed an online LLIE-Platform.

As of the date of submission, the LLIE-Platform covers 14 popular deep learning-based LLIE methods: LLNet, LightenNet, Retinex-Net, EnlightenGAN, MBLLEN, KinD, KinD++, TBEFN, DSLR, DRBN, ExCNet, Zero-DCE, Zero-DCE++, and RRDNet. Results for any input can be generated through a user-friendly web interface.

Related Projects

Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation

C. Li*, C. Guo*, C. C. Loy

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021 (TPAMI)

[DOI] [arXiv] [Project Page]

Zero-Reference Deep Curve Estimation for Low-Light Image Enhancement

C. Guo*, C. Li*, J. Guo, C. C. Loy, J. Hou, S. Kwong, R. Cong

In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020 (CVPR)

[PDF] [arXiv] [Supplementary Material] [Project Page]