About

MMLab@NTU

MMLab@NTU was formed on 1 August 2018, with a research focus on computer vision and deep learning. Its sister lab is MMLab@CUHK. It is now a group with three faculty members and more than 40 other members, including research fellows, research assistants, research engineers and PhD students.

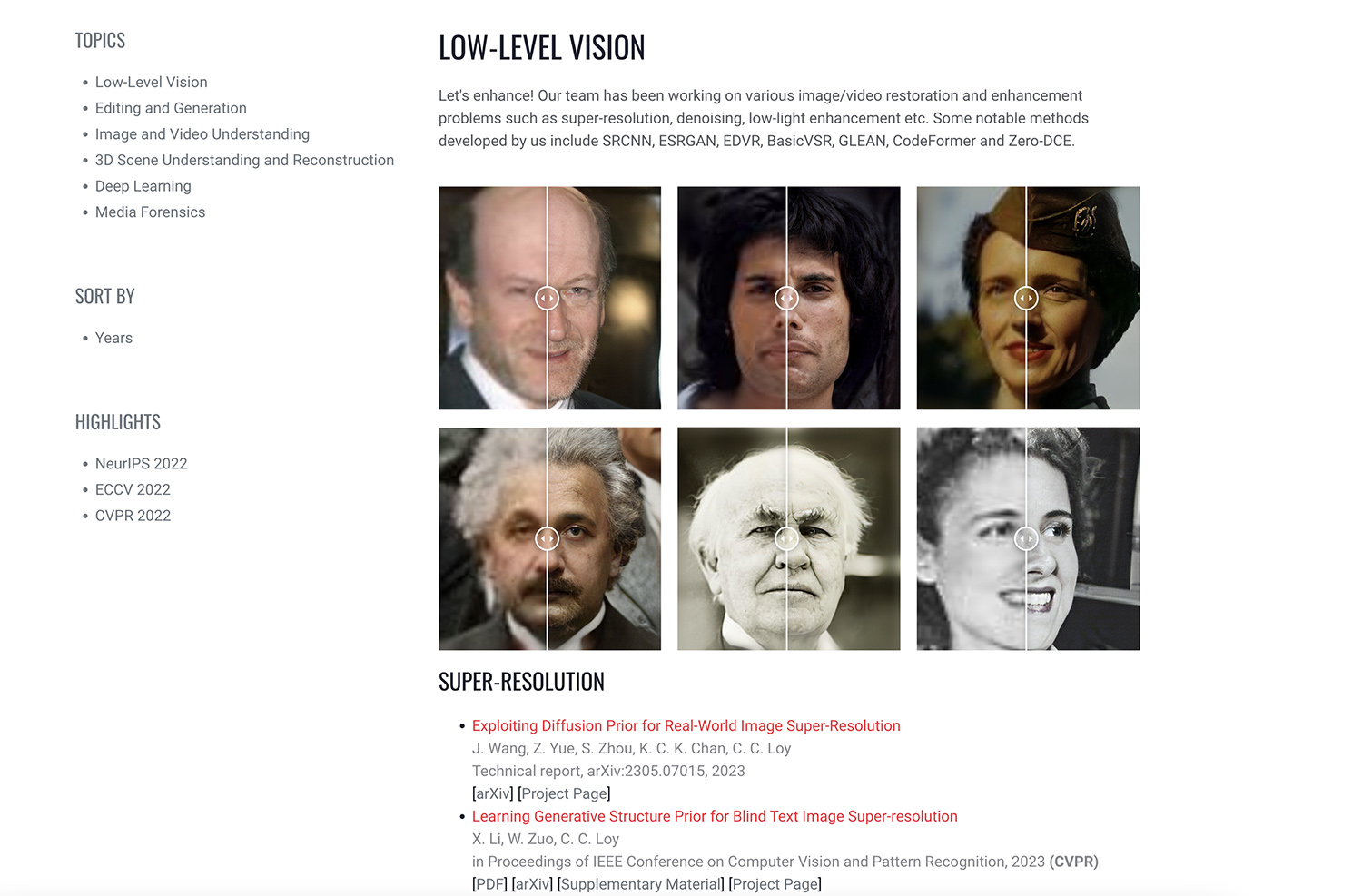

Members of MMLab@NTU conduct research primarily in low-level vision, image and video understanding, and generative AI. Have a look at the overview of our research. All publications are listed here.

We are always looking for motivated PhD students, postdocs, and research assistants who share our interests. Check out the careers page and follow us on Twitter.

In Memoriam: Xiaoou Tang

05/2024: The Editors-in-Chief of the International Journal of Computer Vision (IJCV) are deeply saddened by the significant loss of Xiaoou Tang, a former Editor-in-Chief of IJCV, who passed away on 15 December 2023 at the age of 55.

Asian Young Scientist Fellowship

08/2024: Ziwei Liu is awarded the Asian Young Scientist Fellowship. The AYSF committee acknowledges his contribution to the development of AI-driven mixed reality and would like to support his exploration in the direction of high-quality reconstruction for virtual/mixed reality toward advancing AI theories, algorithms, and systems for holistic perceptions.

CVPR 2025

02/2025: The team has a total of 20 papers (including 2 orals and 3 highlights) accepted to CVPR 2025.

Singapore Open Research Award 2024

11/2024: Congrats to the team! The Singapore Open Research Awards 2024 aims to recognise and reward Singapore researchers who have used open research to make their scientific contents, tools, and processes open, accessible, transparent and reproducible, or have integrated it into their practice.

Check Out

News and Highlights

- 02/2025: Yushi Lan and Zhaoxi Chen received the Outstanding Prize from the Meshy Fellowship Program 2025. Congrats!

- 11/2024: Siyao Li was awarded the highly competitive and prestigious Google PhD Fellowship 2024 in the area “Machine Intelligence”. Congrats!

- 11/2024: Professor Chen Change Loy was awarded the Nanyang Research Award in recognition of his significant breakthroughs and global impact in the field of visual content enhancement, image restoration, and video restoration.

- 11/2024: SRCNN was awarded the Test of Time Award by the Technical Committee on Computer Vision of the China Computer Federation (CCF-CV).

- 10/2024: Congratulations to Fangzhou Hong, Jingkang Yang, and Ziqi Huang for being selected as outstanding reviewers of ECCV 2024.

- 06/2024: Congratulations to Jianyi Wang and Jingkang Yang for being selected as outstanding reviewers of CVPR 2024.

- 03/2024: The team has a total of 15 papers accepted to CVPR 2024.

Recent

Projects

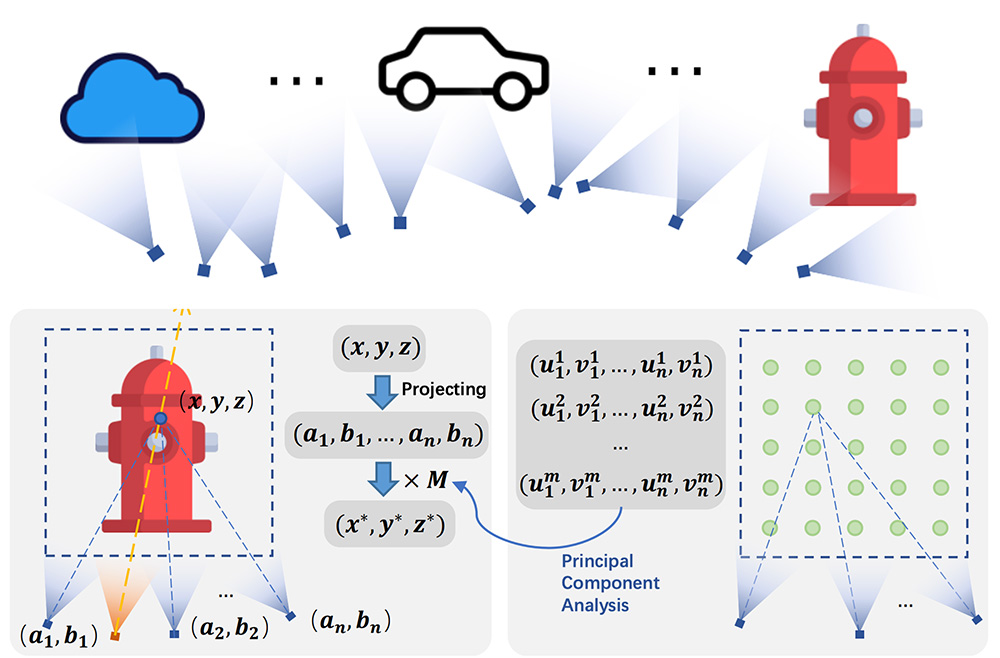

OmniObject3D: Large-Vocabulary 3D Object Dataset for Realistic Perception, Reconstruction and Generation

T. Wu, J. Zhang, X. Fu, Y. Wang, J. Ren, L. Pan, W. Wu, L. Yang, J. Wang, C. Qian, D. Lin, Z. Liu

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2023 (CVPR, Award Candidate)

[PDF] [arXiv] [Supplementary Material] [Project Page]

We propose OmniObject3D, a large-vocabulary 3D object dataset with a large collection of high-quality real-scanned 3D objects to facilitate the development of 3D perception, reconstruction, and generation in the real world. OmniObject3D comprises 6,000 scanned objects in 190 daily categories, sharing common classes with popular 2D datasets (e.g., ImageNet and LVIS), which benefits the pursuit of generalizable 3D representations.

F2-NeRF: Fast Neural Radiance Field Training with Free Camera Trajectories

P. Wang, Y. Liu, Z. Chen, L. Liu, Z. Liu, T. Komura, C. Theobalt, W. Wang

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2023 (CVPR, Highlight)

[PDF] [arXiv] [Supplementary Material] [Project Page]

This paper presents a novel grid-based NeRF called F2-NeRF (Fast-Free-NeRF) for novel view synthesis, which supports arbitrary input camera trajectories and requires only a few minutes of training. The two widely used existing space-warping methods are designed only for the forward-facing trajectory or the 360-degree object-centric trajectory and cannot process arbitrary trajectories. In this paper, we delve deep into the mechanism of space warping to handle unbounded scenes.

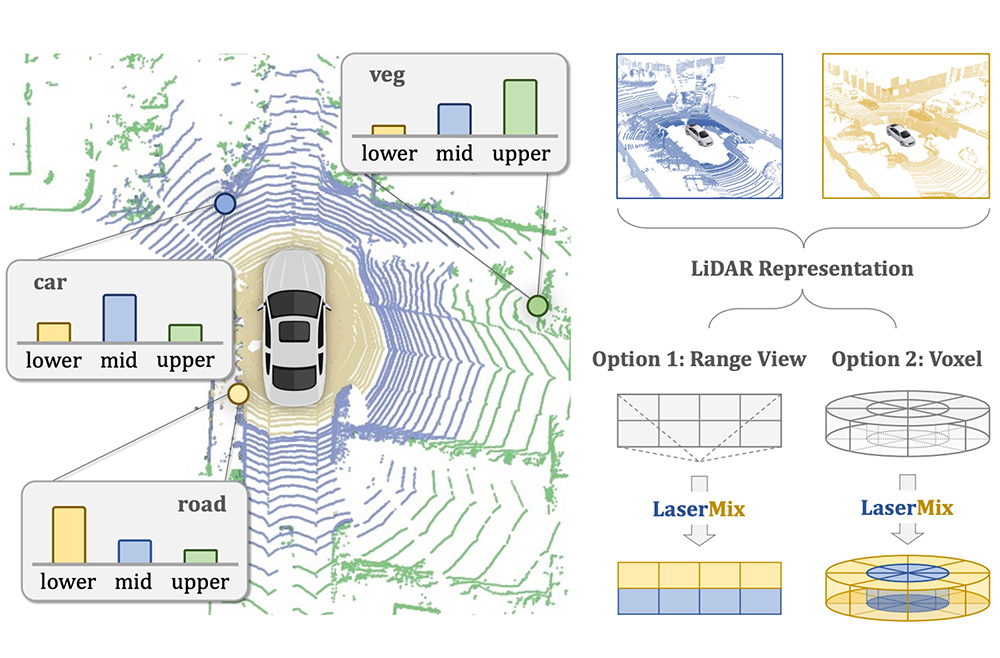

LaserMix for Semi-Supervised LiDAR Semantic Segmentation

L. Kong, J. Ren, L. Pan, Z. Liu

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2023 (CVPR, Highlight)

[PDF] [arXiv] [Supplementary Material] [Project Page]

We study the underexplored semi-supervised learning problem in LiDAR segmentation. Our core idea is to leverage the strong spatial cues of LiDAR point clouds to better exploit unlabeled data. We propose LaserMix to mix laser beams from different LiDAR scans, and then encourage the model to make consistent and confident predictions before and after mixing.

Nighttime Smartphone Reflective Flare Removal using Optical Center Symmetry Prior

Y. Dai, Y. Luo, S. Zhou, C. Li, C. C. Loy

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2023 (CVPR, Highlight)

[PDF] [arXiv] [Supplementary Material] [Project Page]

Reflective flare is a phenomenon that occurs when light reflects inside lenses, causing bright spots or a “ghosting effect” in photos. We propose an optical center symmetry prior, which suggests that the reflective flare and light source are always symmetrical around the lens’s optical center. This prior helps to locate the reflective flare’s proposal region more accurately and can be applied to most smartphone cameras.
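As a rough illustration of the symmetry prior described above, the sketch below computes where a reflective flare would be expected given a light source position, using a plain point reflection about the optical center. It is not the paper's pipeline: the function name `reflect_about_center`, the image size, and the choice to place the optical center at the image center are all assumptions made for this example.

```python
import numpy as np


def reflect_about_center(point, center):
    """Return the point reflection of `point` about `center`: p' = 2c - p.

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    point = np.asarray(point, dtype=float)
    center = np.asarray(center, dtype=float)
    return 2.0 * center - point


# Assumed values: a 4000x3000 image whose optical center is taken to be
# the image center. A real camera's optical center may be offset from it.
optical_center = (2000.0, 1500.0)
light_source = (2600.0, 900.0)

# Under the symmetry prior, the flare is expected near this location.
expected_flare = reflect_about_center(light_source, optical_center)
print(expected_flare)
```

Reflecting the expected flare position a second time returns the original light source position, which is a quick sanity check on the symmetry.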