FASA: Feature Augmentation and Sampling Adaptation for Long-Tailed Instance Segmentation

ICCV 2021


Abstract

Recent methods for long-tailed instance segmentation still struggle on rare object classes with few training samples. We propose a simple yet effective method, Feature Augmentation and Sampling Adaptation (FASA), that addresses the data scarcity issue by augmenting the feature space, especially for rare classes. Both the Feature Augmentation (FA) and feature sampling components adapt to the actual training status: FA is informed by the feature mean and variance of observed real samples from past iterations, and the generated virtual features are sampled in a loss-adapted manner to avoid over-fitting.

FASA does not require any elaborate loss design, and it removes the need for inter-class transfer learning, which often involves large costs and manually defined head/tail class groups. FASA is a fast, generic method that can be easily plugged into standard or long-tailed segmentation frameworks, with consistent performance gains and little added cost. FASA also applies to other tasks such as long-tailed classification, where it achieves state-of-the-art performance.

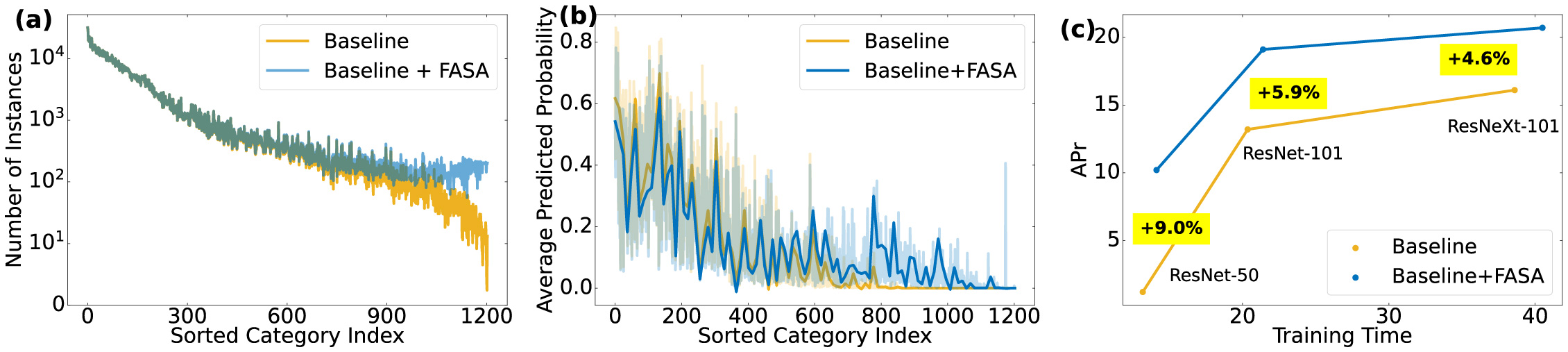

Class imbalance and a comparison of the Mask R-CNN baseline with and without FASA on the LVIS v1.0 dataset. (a) Through adaptive feature augmentation and sampling, FASA largely alleviates the imbalance issue, especially for rare classes. (b) Comparison of the prediction results of FASA and the Mask R-CNN baseline in terms of average category probability scores. The baseline model predicts near-zero scores for rare classes, whereas with FASA the rare-class scores are significantly boosted, which benefits final performance. (c) FASA brings consistent improvements in mask APr (AP on rare classes) across different backbone models. These gains come at a very low cost: training time increases by only 3% on average.

The Framework

FASA

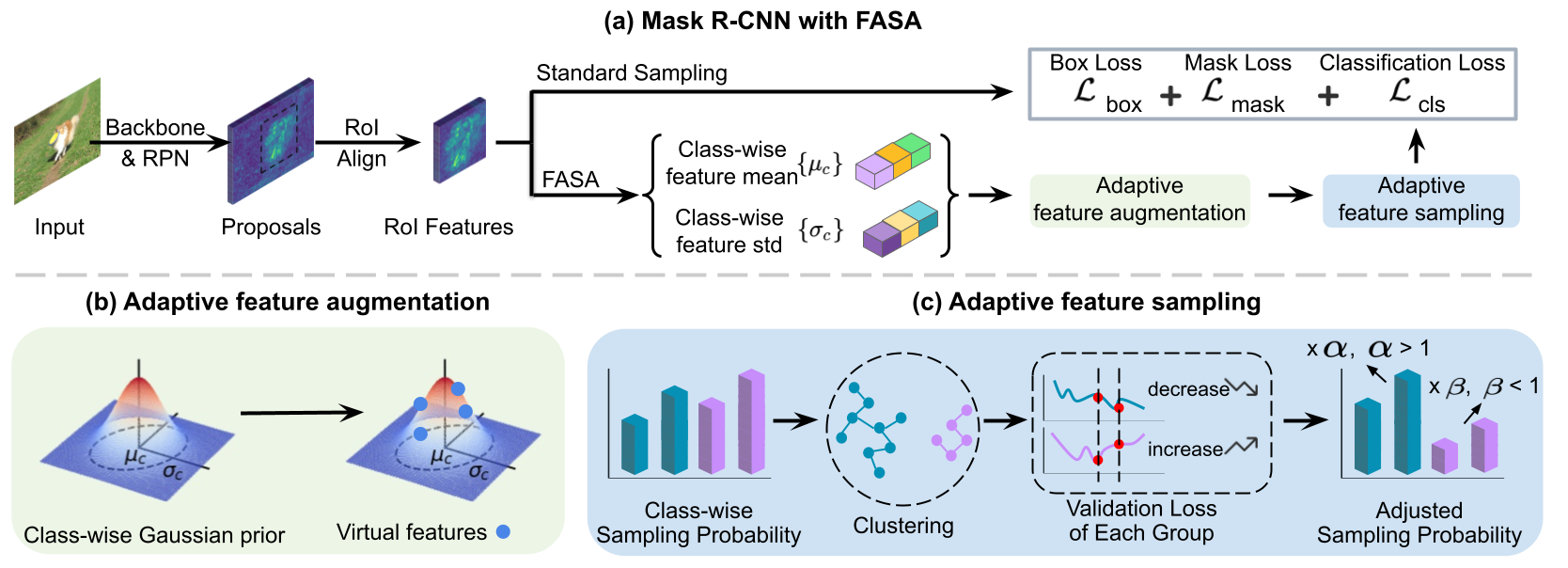

The proposed framework consists of two components:

- Adaptive Feature Augmentation (FA), which generates virtual features to augment the feature space of all classes (especially rare classes)

- Adaptive Feature Sampling (FS), which dynamically adjusts the sampling probability of virtual features for each class
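Adaptive feature augmentation can be illustrated with a small sketch. It is not the authors' implementation: it assumes that each class keeps an exponential moving average (momentum 0.9, an arbitrary choice) of the per-dimension mean and variance of its real features, and that virtual features are drawn from a diagonal Gaussian with those statistics. `ClassStats`, `update` and `sample` are hypothetical names.

```python
import random


class ClassStats:
    """Running per-dimension mean/variance of one class's real features (hypothetical helper)."""

    def __init__(self, dim, momentum=0.9):
        self.dim = dim
        self.momentum = momentum  # assumed EMA momentum; not a value taken from the paper
        self.mean = [0.0] * dim
        self.var = [0.0] * dim
        self.initialized = False

    def update(self, feats):
        """Blend the statistics of a batch of real features (list of vectors) into the running ones."""
        if not feats:
            return  # class absent from this batch: keep the old statistics
        n = len(feats)
        batch_mean = [sum(f[d] for f in feats) / n for d in range(self.dim)]
        batch_var = [sum((f[d] - batch_mean[d]) ** 2 for f in feats) / n
                     for d in range(self.dim)]
        if not self.initialized:
            # First observation: take the batch statistics as they are.
            self.mean, self.var = batch_mean, batch_var
            self.initialized = True
            return
        m = self.momentum
        # Exponential moving average over past iterations.
        self.mean = [m * old + (1 - m) * new for old, new in zip(self.mean, batch_mean)]
        self.var = [m * old + (1 - m) * new for old, new in zip(self.var, batch_var)]

    def sample(self, k, rng):
        """Draw k virtual features from a diagonal Gaussian N(mean, var)."""
        if not self.initialized:
            return []  # no real features seen yet, so nothing to imitate
        std = [v ** 0.5 for v in self.var]
        return [[rng.gauss(mu, s) for mu, s in zip(self.mean, std)] for _ in range(k)]


# Toy usage with 4-dimensional features for a single rare class.
stats = ClassStats(dim=4)
stats.update([[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]])  # initializes the statistics
stats.update([[2.0, 3.0, 4.0, 5.0], [2.0, 3.0, 4.0, 5.0]])  # blended in with momentum 0.9
virtual = stats.sample(3, random.Random(0))  # three virtual feature vectors
```

In a detector, the sampled vectors would presumably be fed to the classification branch with the class label they were generated for. The moving average weights recent iterations more heavily, so the statistics can follow the features as the backbone keeps changing during training.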

(a) The pipeline of Mask R-CNN combined with the proposed FASA, a standalone module that generates virtual features to augment the classification branch for better performance on long-tailed data. FASA maintains class-wise feature mean and variance online, followed by (b) adaptive feature augmentation and (c) adaptive feature sampling.
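Adaptive feature sampling (c) decides how often each class receives virtual features. The sketch below assumes a simple additive rule driven by a per-class loss measured in two consecutive rounds (for example, on a class-balanced held-out set): if the loss went down, the class's sampling probability rises by `step`; otherwise it drops, which curbs over-fitting to virtual features. The rule, the step size, and the names `update_sampling_probs` and `classes_to_augment` are illustrative assumptions, not the paper's exact formulation.

```python
import random


def update_sampling_probs(probs, prev_loss, curr_loss, step=0.1, p_min=0.0, p_max=1.0):
    """Hypothetical loss-adapted update of per-class sampling probabilities.

    All three dicts are keyed by class id. A falling loss suggests the virtual
    features help, so the class is sampled more; a flat or rising loss suggests
    over-fitting, so it is sampled less. Results are clipped to [p_min, p_max].
    """
    new_probs = {}
    for c, p in probs.items():
        p = p + step if curr_loss[c] < prev_loss[c] else p - step
        new_probs[c] = min(p_max, max(p_min, p))
    return new_probs


def classes_to_augment(probs, rng):
    """One Bernoulli draw per class: which classes get virtual features this iteration."""
    return sorted(c for c, p in probs.items() if rng.random() < p)


probs = {"koala": 0.5, "person": 0.5}
prev_loss = {"koala": 1.2, "person": 0.30}
curr_loss = {"koala": 1.0, "person": 0.35}
probs = update_sampling_probs(probs, prev_loss, curr_loss)  # koala goes up, person goes down
chosen = classes_to_augment(probs, random.Random(0))
```

Clipping keeps every probability a valid Bernoulli parameter. A multiplicative update would be an equally plausible design choice under the same assumptions.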

Experimental Results

| Loss | Sampler | #Epoch | FASA | AP | APr | APc | APf |
|---|---|---|---|---|---|---|---|
| Softmax CE | Uniform | 24 | ✗ | 19.3 | 1.2 | 17.4 | 29.3 |
| Softmax CE | Uniform | 24 | ✓ | 22.6 | 10.2 | 21.6 | 29.2 |
| Softmax CE | RFS | 24 | ✗ | 22.8 | 12.9 | 21.6 | 28.3 |
| Softmax CE | RFS | 24 | ✓ | 24.1 | 17.3 | 22.9 | 28.5 |
| EQL | Uniform | 24 | ✗ | 22.1 | 5.1 | 22.4 | 29.3 |
| EQL | Uniform | 24 | ✓ | 24.4 | 15.4 | 23.5 | 29.4 |
| cRT | Uniform/RFS | 12+12 | ✗ | 22.4 | 12.2 | 20.4 | 29.1 |
| cRT | Uniform/RFS | 12+12 | ✓ | 23.6 | 15.1 | 22.0 | 29.1 |
| BaGS | Uniform/RFS | 12+12 | ✗ | 22.8 | 12.4 | 22.2 | 28.3 |
| BaGS | Uniform/RFS | 12+12 | ✓ | 24.0 | 15.2 | 23.4 | 28.3 |
| Seesaw | RFS | 24 | ✗ | 26.4 | 19.6 | 26.1 | 29.8 |
| Seesaw | RFS | 24 | ✓ | 27.5 | 21.0 | 27.5 | 30.1 |
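As a quick check on the claim of consistent gains, the short script below recomputes the with-minus-without-FASA differences in AP and APr for each setting of the table above. The numbers are copied from the table; `RESULTS` and `fasa_gains` are only illustrative names.

```python
# (AP, APr) pairs copied from the table: (without FASA, with FASA).
RESULTS = {
    "Softmax CE / Uniform": ((19.3, 1.2), (22.6, 10.2)),
    "Softmax CE / RFS": ((22.8, 12.9), (24.1, 17.3)),
    "EQL / Uniform": ((22.1, 5.1), (24.4, 15.4)),
    "cRT / Uniform/RFS": ((22.4, 12.2), (23.6, 15.1)),
    "BaGS / Uniform/RFS": ((22.8, 12.4), (24.0, 15.2)),
    "Seesaw / RFS": ((26.4, 19.6), (27.5, 21.0)),
}


def fasa_gains(results):
    """Return {setting: (delta AP, delta APr)}, rounded to one decimal like the table."""
    return {
        name: (round(with_fasa[0] - base[0], 1), round(with_fasa[1] - base[1], 1))
        for name, (base, with_fasa) in results.items()
    }


for name, (d_ap, d_apr) in fasa_gains(RESULTS).items():
    print(f"{name:22s} AP {d_ap:+.1f}  APr {d_apr:+.1f}")
```

Every setting improves in both AP and APr. The APr gain is largest for EQL with uniform sampling (+10.3) and smallest on top of Seesaw loss (+1.4).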

| Method | Loss | Sampler | Backbone | FASA | AP | APr | APc | APf |
|---|---|---|---|---|---|---|---|---|
| Mask R-CNN | Softmax CE | RFS | R101 | ✗ | 24.4 | 13.2 | 24.7 | 30.3 |
| Mask R-CNN | Softmax CE | RFS | R101 | ✓ | 26.3 | 19.1 | 25.4 | 30.6 |
| Mask R-CNN | Softmax CE | RFS | X101 | ✗ | 26.1 | 16.1 | 24.9 | 32.0 |
| Mask R-CNN | Softmax CE | RFS | X101 | ✓ | 27.7 | 20.7 | 26.6 | 32.0 |
| Cascade Mask R-CNN | Softmax CE | RFS | R101 | ✗ | 25.4 | 13.7 | 24.8 | 31.4 |
| Cascade Mask R-CNN | Softmax CE | RFS | R101 | ✓ | 27.7 | 19.8 | 27.3 | 31.6 |
| Cascade Mask R-CNN | Seesaw | RFS | R101 | ✗ | 30.1 | 21.4 | 30.0 | 33.9 |
| Cascade Mask R-CNN | Seesaw | RFS | R101 | ✓ | 31.5 | 24.1 | 31.9 | 34.9 |

Result Visualization

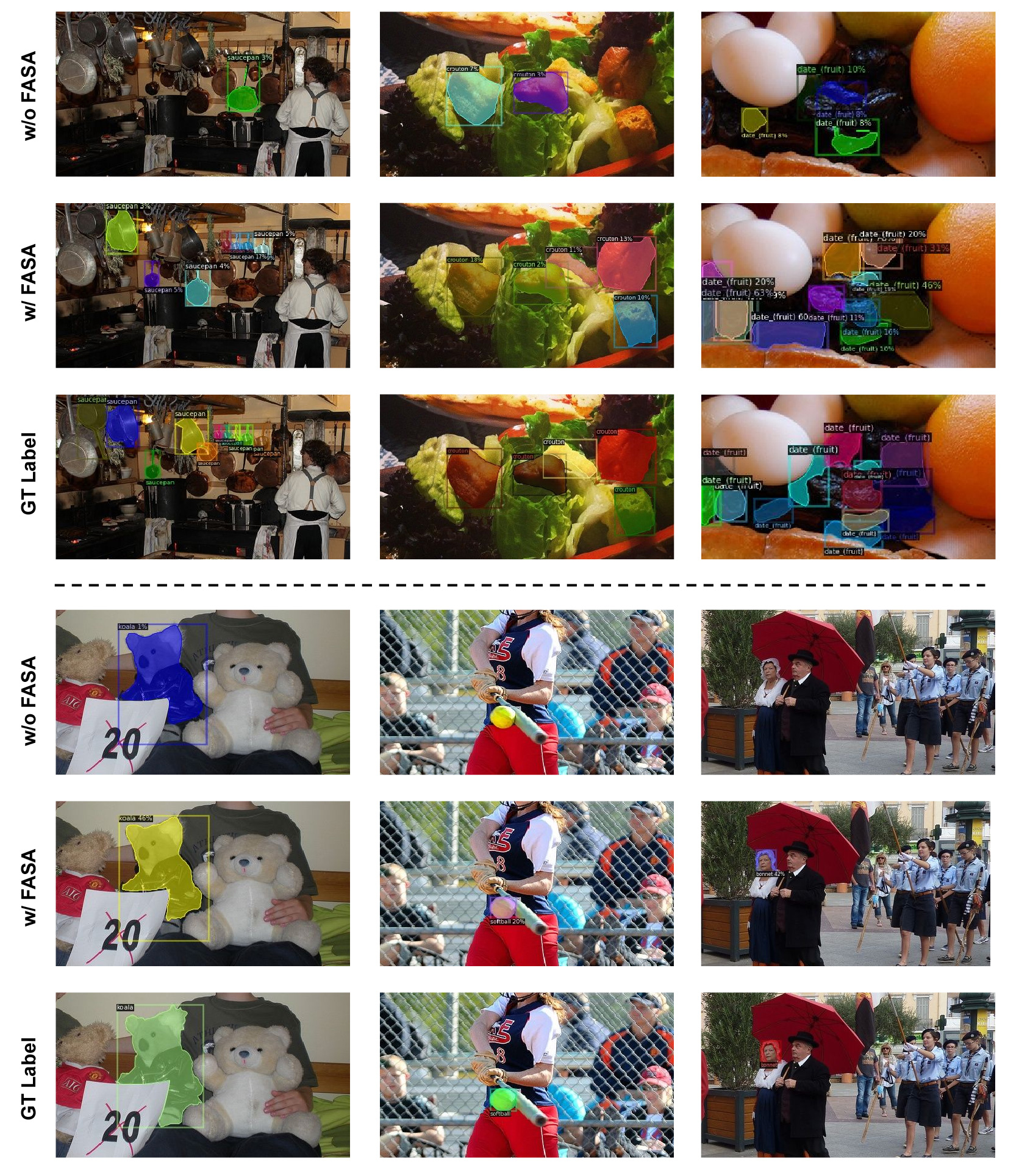

To better interpret the results, we show segmentation results for selected rare classes. Without FASA, the prediction scores for rare classes are low, or the objects are missed entirely. In contrast, with FASA, rare-class objects are classified more accurately.

Prediction results of the Mask R-CNN framework without and with FASA on the LVIS v1.0 validation set, for six rare classes: 'saucepan', 'crouton', 'date (fruit)', 'koala', 'softball' and 'bonnet'. With FASA, Mask R-CNN yields more correct classifications than the baseline.


Citation

@InProceedings{zang2021fasa,
  author = {Zang, Yuhang and Huang, Chen and Loy, Chen Change},
  title = {FASA: Feature Augmentation and Sampling Adaptation for Long-Tailed Instance Segmentation},
  booktitle = {Proceedings of IEEE/CVF International Conference on Computer Vision},
  year = {2021}
}

Related Projects

- Open-Vocabulary DETR with Conditional Matching
  Y. Zang, W. Li, K. Zhou, C. Huang, C. C. Loy
  European Conference on Computer Vision (ECCV), 2022, Oral

- Seesaw Loss for Long-Tailed Instance Segmentation
  J. Wang, W. Zhang, Y. Zang, Y. Cao, J. Pang, T. Gong, K. Chen, Z. Liu, C. C. Loy, D. Lin
  Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021

- Hybrid Task Cascade for Instance Segmentation
  K. Chen, J. Pang, J. Wang, Y. Xiong, X. Li, S. Sun, W. Feng, Z. Liu, J. Shi, W. Ouyang, C. C. Loy, D. Lin
  Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019