Chen Change Loy is a President's Chair Professor with the College of Computing and Data Science, Nanyang Technological University, Singapore. He is the Lab Director of MMLab@NTU and Co-associate Director of S-Lab. He received his Ph.D. (2010) in Computer Science from Queen Mary University of London. Prior to joining NTU, he served as a Research Assistant Professor at the MMLab of The Chinese University of Hong Kong from 2013 to 2018.

His research interests include computer vision and deep learning, with a focus on image/video restoration and enhancement, generative tasks, and representation learning. He has served as an Associate Editor of the International Journal of Computer Vision (IJCV), IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), and Computer Vision and Image Understanding (CVIU). He has also served as an Area Chair of top conferences, including ICCV, CVPR, ECCV, ICLR, and NeurIPS. He will serve as the Program Co-Chair of CVPR 2026 and the General Co-Chair of ACCV 2028.

MMLab@NTU

MMLab@NTU was formed on 1 August 2018, with a research focus on computer vision and deep learning. Its sister lab is MMLab@CUHK. The group now comprises three faculty members and more than 40 members, including research fellows, research assistants, and PhD students. Members of MMLab@NTU conduct research primarily in low-level vision, image and video understanding, creative content creation, and 3D scene understanding and reconstruction.

Visit MMLab@NTU Homepage

Recent Papers

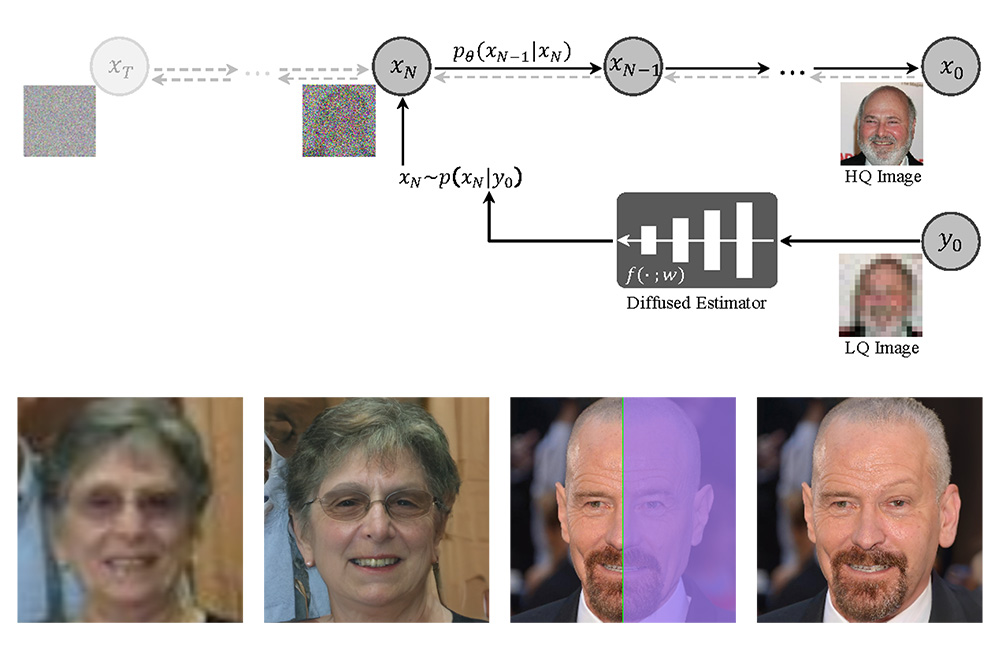

DifFace: Blind Face Restoration with Diffused Error Contraction

Z. Yue, C. C. Loy

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024 (TPAMI)

[arXiv]

[Project Page]

[Demo]

Deep learning-based methods for blind face restoration have shown great success, but they struggle with complex degradations outside their training data and require elaborate loss designs. DifFace addresses these issues by establishing a posterior distribution from low-quality to high-quality images using a pre-trained diffusion model, thereby avoiding complicated loss designs and cumbersome training processes.

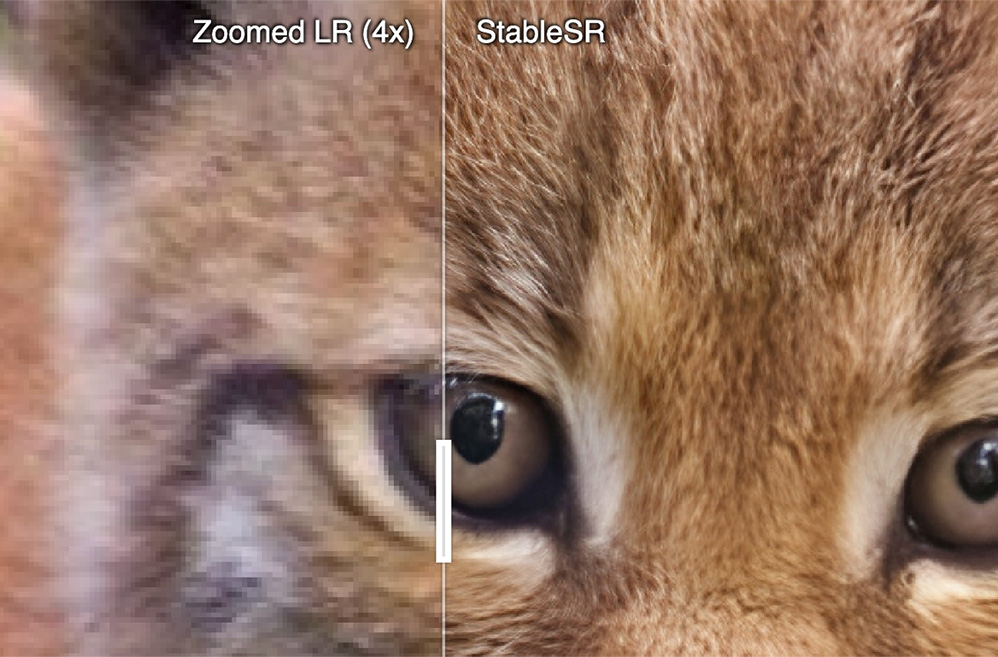

Exploiting Diffusion Prior for Real-World Image Super-Resolution

J. Wang, Z. Yue, S. Zhou, K. C. K. Chan, C. C. Loy

International Journal of Computer Vision, 2024 (IJCV)

[PDF]

[DOI]

[arXiv]

[Project Page]

We present a novel approach that leverages the prior knowledge encapsulated in pre-trained text-to-image diffusion models for blind super-resolution (SR). By employing our time-aware encoder, we achieve promising restoration results without altering the pre-trained synthesis model, thereby preserving the generative prior and minimizing training cost.

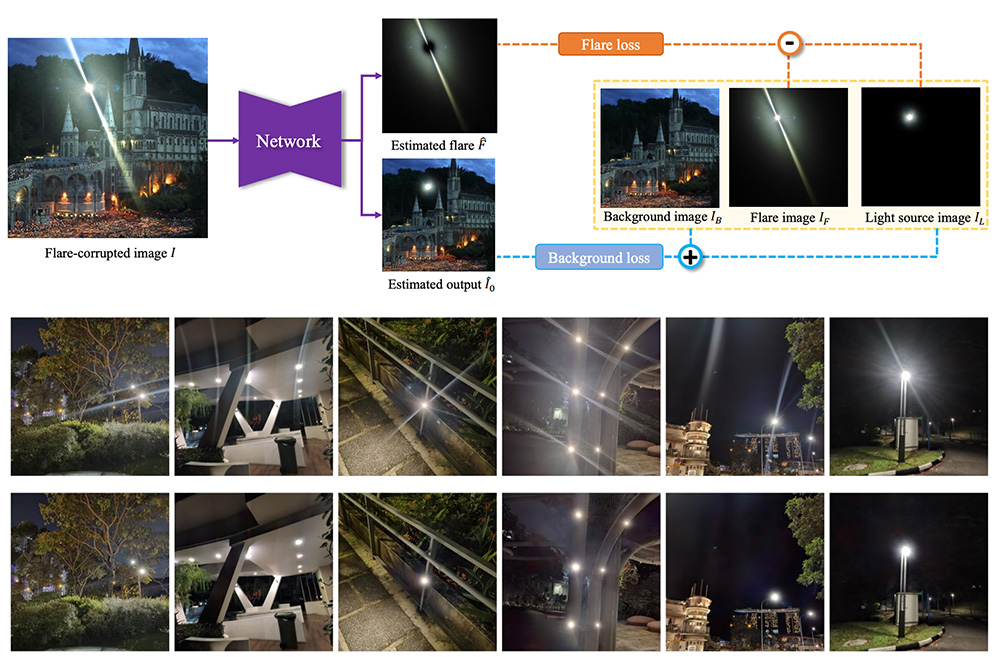

Flare7K++: Mixing Synthetic and Real Datasets for Nighttime Flare Removal and Beyond

Y. Dai, C. Li, S. Zhou, R. Feng, Y. Luo, C. C. Loy

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024 (TPAMI)

[arXiv]

[Project Page]

Artificial lights at night cause lens flare artifacts that degrade image quality and the performance of vision algorithms, yet existing methods primarily address daytime flares. We introduce Flare7K++, a dataset of 962 real and 7,000 synthetic flare images that, unlike previous datasets, captures the complex degradations around light sources. This dataset, along with light source annotations and a new end-to-end pipeline, provides a robust foundation for future nighttime flare removal research and demonstrates significant improvements in real-world scenarios.

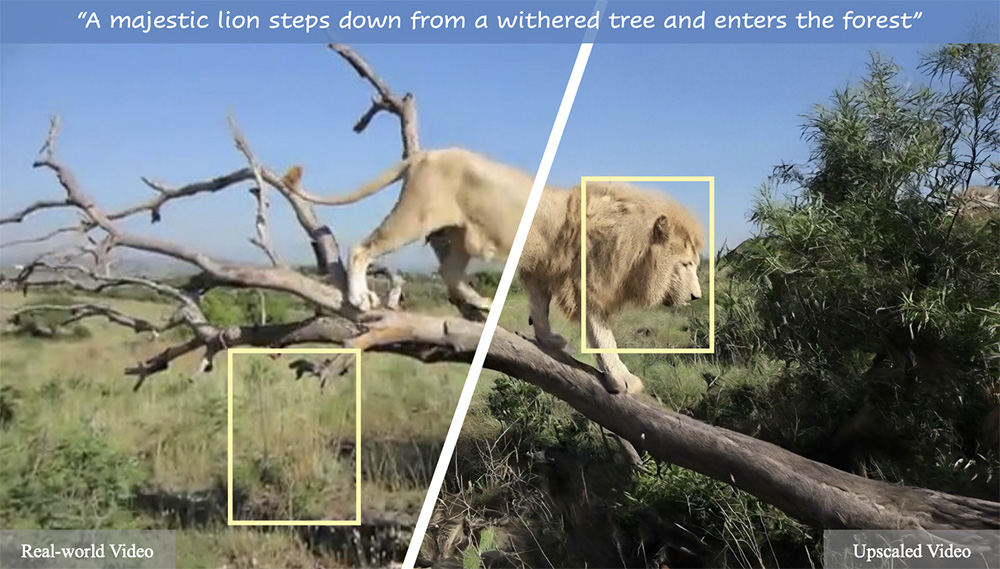

Upscale-A-Video: Temporal-Consistent Diffusion Model for Real-World Video Super-Resolution

S. Zhou, P. Yang, J. Wang, Y. Luo, C. C. Loy

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2024 (CVPR, Highlight)

[PDF]

[arXiv]

[Supplementary Material]

[Project Page]

[YouTube]

Text-based diffusion models have shown great potential for visual content generation, but they face challenges in video super-resolution due to the need for high fidelity and temporal consistency. Our study presents Upscale-A-Video, a text-guided latent diffusion framework that achieves temporal coherence locally by integrating temporal layers and globally through a flow-guided recurrent latent propagation module. The model balances restoration and generation, surpasses existing methods on both synthetic and real-world benchmarks, and demonstrates impressive visual realism and temporal consistency on AI-generated videos.

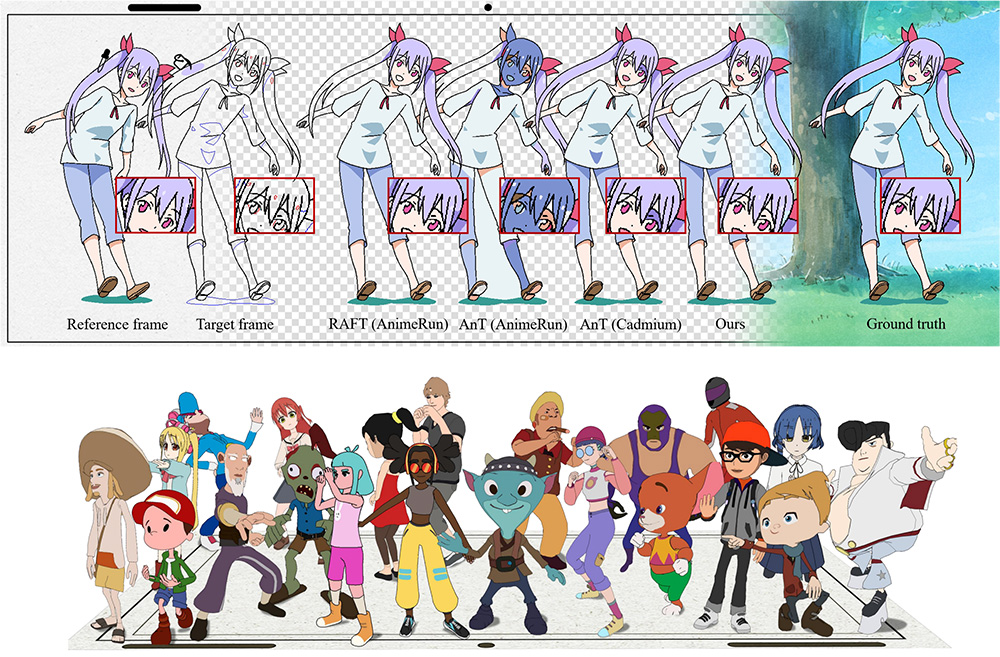

Learning Inclusion Matching for Animation Paint Bucket Colorization

Y. Dai, S. Zhou, Q. Li, C. Li, C. C. Loy

in Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2024 (CVPR)

[PDF]

[arXiv]

[Supplementary Material]

[Project Page]

[YouTube]

Current automated colorization methods often struggle with occlusion and wrinkles in animations because they rely on segment matching for color migration. We introduce a new learning-based inclusion matching pipeline that focuses on the inclusion relationships between segments rather than on visual correspondences alone. Our method, supported by the PaintBucket-Character dataset, includes a two-stage estimation module for nuanced and accurate colorization and outperforms existing techniques in extensive experiments.